Why DataToInsights Wins in Self Serve Analytics?

**Summary**

Self-service analytics should shorten the distance between a business question and a trustworthy answer. Most teams miss that mark because they bolt a chat UI on top of messy data and call it a day.

This guide lays out what self-service actually is, the traps that kill adoption, and a concrete blueprint to make it work governed, explainable, and fast. I’ll also show how DataToInsights implements this blueprint end-to-end with agentic pipelines, a semantic layer, and verifiable SQL and lineage so non-technical users can move from raw files to reliable decisions without camping in a BI backlog.

**What is Self-Service Analytics mean?**

The ability for non-technical operators (finance, ops, CX, revenue, supply chain) to ask a business question in plain language and receive a governed, explainable answer with evidence and without waiting on IT/BI team.

The core promise: speed × trust. If you only have one without the other, it’s not self-service , it’s shadow IT or pretty dashboards.

**Why Self-Service Often Fails?**

- Messy inputs: files, exports, and siloed systems with inconsistent rules.

- No semantic contract: metrics mean different things across teams.

- Chat ≠ context: LLMs hallucinate when lineage and data quality are unknown.

- Governance afterthought: access, PII, and audit left to “we’ll add later.”

- BI backlogs: every new question becomes a ticket; momentum dies.

**A. Practical Framework that Works**

**1) Ingest & Normalize:**

Bring in files, databases, SaaS sources. Standardize schemas, types, and keys.

**2) Quality Gate (pass/fix/explain):**

Automated checks for nulls, duplicates, drift, outliers, valid ranges, referential integrity. If something fails, suggest fixes or auto-repair with approvals.

**3) Business Rules → Semantic Layer:**

Codify definitions once: revenue, active customer, churn, margin logic, time buckets, SCD handling. Publish as governed metrics.

**4) Context Graph:**

Map entities (customer, order, SKU, ticket) and relationships. Attach glossary, policy, owners, and lineage.

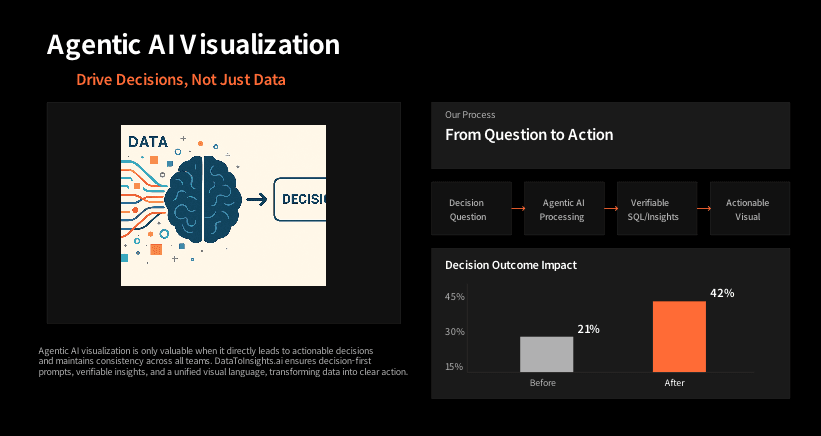

**5) Agentic Answering with Evidence:** Natural-language Q → verifiable SQL on governed sources → answer + confidence + links to lineage, tests, and owners.

**6) Distribution Inside Workflows:**

Embed in the tools teams live in (Sheets, Slack, CRM, ticketing), schedule alerts, and push ready-to-act packets (not just charts).

**7) Telemetry & Guardrails:** Track who asked what, which metrics were used, result freshness, and where answers created downstream action.

**Pros, Cons, and How to Mitigate**

_**Pros**_

- Faster cycle times from question → action

- Fewer BI tickets; more strategic engineering

- Shared language for metrics; fewer “dueling dashboards”

- Better auditability and compliance

_**Cons & Mitigations**_

- Misinterpretation → show SQL, lineage, and business definition next to every answer.

- Data drift → continuous tests + drift monitors + alerts.

- Policy risk → role-based access that flows from the semantic layer.

- Tool over-reliance → embed owners, notes, and examples with each metric; keep humans in the loop for fixes.

**Best Practices That Actually Move the Needle**

1. Question-first design: start with top 20 recurring questions by role.

2. Contracts before charts: metric definitions, owners, SLAs.

3. Declarative tests: nulls, uniqueness, ranges, reference lists, volume and schema drift.

4. Explainability by default: SQL, lineage, freshness, and pass/fail checks adjacent to the answer.

5. Right to repair: propose and apply data fixes, track approvals.

6. Embed where work happens: CRM, finance apps, helpdesk, Notion, Slack.

7. Measure impact: time-to-insight, avoided rework, decision latency, $$ outcomes.

**What to Look For in a Self-Service Platform**

1. Agentic pipelines that prepare data (not just query it).

2. Semantic/metrics layer with versioning and RBAC.

3. Knowledge/lineage graph tied to every metric and answer.

4. Verifiable SQL behind every response—no black boxes.

5. Analytics-as-code (git, CI, environments, tests).

6. Data quality automation with repair suggestions and approvals.

7. Warehouse-native performance (Snowflake, Postgres, etc.).

8. Embeddability (SDK/API) and alerting.

9. Audit & compliance built in (PII policies, usage logs).

**Why DataToInsights is the Best Choice?**

Built for operators, not demos. DataToInsights is a Vertical-Agnostic Agentic Data OS that takes you from raw inputs to governed answers with receipts.

**What you get day one?**

- Ingestion & Normalization: files (CSV/XLS/XLSB), DBs, and SaaS connectors.

- Auto DQ Gate: 20+ universal checks (nulls, dupes, ranges, drift, schema) with auto-repair options and approval workflow.

- Semantic Layer: consistent metrics, time logic, and currency handling, versioned and role-aware.

- Context & Lineage Graph: entities, relationships, ownership, and end-to-end lineage rendered for every answer.

- Agentic Copilot: NL questions → verifiable SQL + explanation + confidence; no vibes.

- Analytics-as-Code: git-native changes, CI checks, dbt-friendly, environments, and rollbacks.

- Embeds & Alerts: push insights into Slack, email, Sheets; embed widgets in internal tools.

- Warehouse-native: runs close to your data (Snowflake/Postgres), no lock-in.

**How it’s different?**

- Answers with evidence: every response shows SQL, tables touched, tests passed, and metric definitions.

- Fix the data, not just the chart: when checks fail, our agent proposes specific transforms (dedupe, type cast, standardize codes) and can apply them with audit.

- Playbooks that ship: finance, CPG, operations, CX—starter question sets, metrics, and policies you can adopt and edit.

- Governance woven in: RBAC, PII policies, metric ownership, and audit logs are first-class—not an afterthought add-on.

**Outcomes teams report?**

- 70–90% fewer BI tickets for recurring questions

- Minutes (not weeks) to get a governed answer

- Measurable reduction in decision latency and rework

- Higher trust: one definition of revenue/churn/COGS across the org