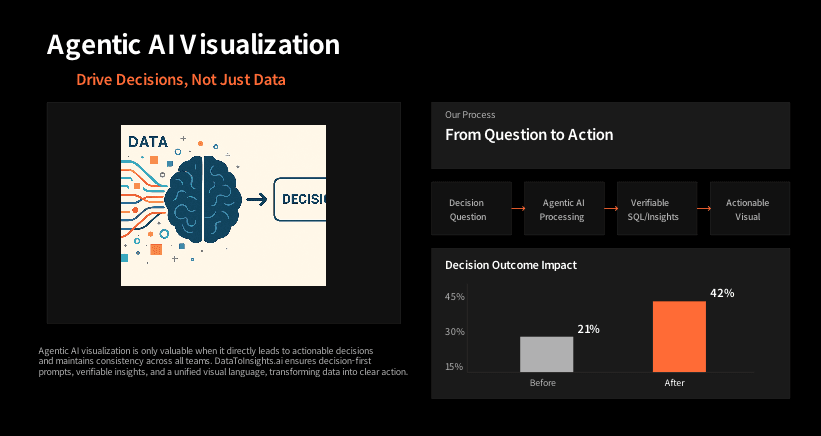

In the age of AI-driven analytics, many organisations are seduced by the idea of “just plug an LLM into your warehouse and ask anything”. Most teams underestimate the engineering effort required to make conversational analytics work in production, at scale, and with real enterprise data.

To succeed in production, you need more than a chat interface — you need an architecture built to understand semantics, learn from usage, secure retrieval, and enforce governance. In this post we’ll walk you through a blueprint for such a platform, anchored around three key layers:

- A Custom Data Understanding Layer that interprets structure, semantics, and business use-cases

- A Learning & Retrieval Layer that evolves and retrieves context-aware information

- A Secured Retrieval & Execution Stage that ensures safe, performant, governed answers

We’ll also highlight why these capabilities matter, what pitfalls to avoid, and how to build each layer effectively.

Why Standard Architectures Fall Short

Many organisations try to build agentic analytics by simply connecting an LLM to their data lake. The shortcomings soon follow:

- Semantic mismatches: Data schemas, business definitions, and domain terminology differ from how users ask questions.

- Ambiguous user intent: Users ask “top customers by region”. Which metric? Which region? Which time period?

- Lack of deterministic planning & governance: Relying purely on LLM output means inconsistent results, hidden logic and poor auditability.

- Performance & scale issues: Real-world data volumes, multi-source integration, complex queries — these break toy demos.

- Security and compliance gaps: Enterprise systems demand role-based access, audit logs, data masking, safe queries — not always built-in.

Building a platform that works in production means addressing these challenges head-on. That’s why we propose a layered architecture.

Our Architecture Blueprint

Here’s how we propose to structure the platform:

1. Custom Data Understanding Layer

This is the foundation. It captures the structure, semantics, and business logic of your data estate.

Key components:

- Schema metadata registry: All tables, columns, relationships, data types, update frequencies.

- Business ontology & semantic model: Define entities (e.g., Customer, Product, Region), dimensions (Time, Geography), metrics (Revenue, Active Users) and their business definitions.

- Synonyms & domain language mapping: Map how users say things (“my area”, “territory”, “geo”) to semantic entities.

- Business-case catalog: Pre-defined use-cases (e.g., “why did churn increase?”, “top accounts by growth”) with context around metrics, time-frames, dimensions.

- Data quality and access metadata: Flags for freshness, reliability, and governance (who can access what, what’s masked).
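To make the metric definitions and synonym mapping above more concrete, here is a minimal Python sketch. It is an illustration under assumptions, not a reference implementation: the class names `Metric` and `SemanticModel`, the `resolve` method, and the table and column names (`fact_invoices`, `dim_geo.region_name`) are hypothetical, as is the "sales" synonym.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Metric:
    name: str
    definition: str    # business definition shown to users
    expression: str    # SQL expression over the source table
    source_table: str


@dataclass
class SemanticModel:
    metrics: dict = field(default_factory=dict)      # canonical name -> Metric
    dimensions: dict = field(default_factory=dict)   # canonical name -> column
    synonyms: dict = field(default_factory=dict)     # user phrase -> canonical name

    def resolve(self, phrase: str) -> Optional[str]:
        """Map a user phrase to a canonical metric or dimension name."""
        key = phrase.strip().lower()
        canonical = self.synonyms.get(key, key)
        if canonical in self.metrics or canonical in self.dimensions:
            return canonical
        return None  # unknown term: ask the user instead of guessing


# Hypothetical example content for one business domain.
model = SemanticModel(
    metrics={
        "revenue": Metric(
            name="revenue",
            definition="Sum of invoiced amounts, net of refunds",
            expression="SUM(net_amount)",
            source_table="fact_invoices",
        )
    },
    dimensions={"region": "dim_geo.region_name"},
    synonyms={
        "my area": "region",
        "territory": "region",
        "geo": "region",
        "sales": "revenue",
    },
)

print(model.resolve("Territory"))  # resolves to the canonical "region"
print(model.resolve("profit"))     # not modelled yet, so None
```

Returning `None` for unknown terms, rather than falling back to a fuzzy guess, is a deliberate choice in this sketch: it gives the agent a clear signal to ask a clarifying question.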

Why this matters: Without this layer your agents will misinterpret user language, use wrong joins, mis-select metrics, and generate untrusted results. Tellius emphasises that building a semantic layer is non-optional for enterprise agentic analytics. (Tellius)

Implementation tip: Start small (one business domain) and iterate the ontology. Provide a searchable dictionary for users. Ensure the layer drives autocomplete and user interaction.

2. Learning & Retrieval Layer

Once you have the semantic base, you need the system to learn from interactions and retrieve context-aware content.

Key components:

- User intent parser: Parses query types (descriptive, diagnostic, predictive, prescriptive) and extracts entities/timeframes/filters. Inspired by Tellius’s “planner” step. (Tellius)

- Contextual memory & session history: Maintain conversational context so follow-up questions (“What about Q4?”) are understood.

- Learning module / feedback loop: Tracks which responses users accepted, corrected, or ignored; refines synonyms, mappings, plan defaults.

- Retrieval module: Maps parsed intent + semantic layer → candidate data sources, business views, metrics; includes ranking/selection of best view.

- Plan generator: Creates a typed execution plan (AST) that orchestrates data retrieval, analytics, transforms, joins, filters — before any SQL or execution.

- Validator: Ensures the plan aligns with business rules, semantic definitions, governance policies, and rejects unsafe queries.
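The plan generator and validator can be pictured with a small Python sketch. Everything here is an assumption made for illustration: the `QueryPlan` and `Filter` types, the `validate` function, the operator allow-list, and the default row limit of 1000 are hypothetical, and a real plan would cover joins, time ranges, and transforms as well.

```python
from dataclasses import dataclass
from typing import Optional

ALLOWED_OPS = {"=", "!=", "<", "<=", ">", ">="}


@dataclass(frozen=True)
class Filter:
    dimension: str
    op: str
    value: str


@dataclass(frozen=True)
class QueryPlan:
    """Typed plan produced before any SQL is generated."""
    metric: str
    group_by: tuple = ()
    filters: tuple = ()
    limit: Optional[int] = None


def validate(plan, known_metrics, known_dimensions, max_limit=1000):
    """Return a list of rule violations; an empty list means the plan is accepted."""
    errors = []
    if plan.metric not in known_metrics:
        errors.append(f"unknown metric: {plan.metric}")
    for dim in plan.group_by:
        if dim not in known_dimensions:
            errors.append(f"unknown dimension: {dim}")
    for flt in plan.filters:
        if flt.dimension not in known_dimensions:
            errors.append(f"unknown dimension: {flt.dimension}")
        if flt.op not in ALLOWED_OPS:
            errors.append(f"unsupported operator: {flt.op}")
    if plan.limit is None or not (1 <= plan.limit <= max_limit):
        errors.append(f"limit must be set and <= {max_limit}")
    return errors


metrics = {"revenue"}
dimensions = {"region", "quarter"}

good = QueryPlan(
    metric="revenue",
    group_by=("region",),
    filters=(Filter("quarter", "=", "Q4"),),
    limit=10,
)
bad = QueryPlan(
    metric="profit",
    group_by=("planet",),
    filters=(Filter("region", "LIKE", "%"),),
)

print(validate(good, metrics, dimensions))  # accepted: no violations
print(validate(bad, metrics, dimensions))   # rejected with four violations
```

Because the plan is plain data, it can be logged, diffed, and checked against governance rules before anything touches the warehouse.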

Why this layer matters: This layer ensures the system learns and adapts, retrieves the right business view, and extracts relevant data for the analytics engine. It bridges user intent and data execution.

3. Secured Retrieval & Execution Stage

At this stage the system runs the plan securely, efficiently, and produces the answer + narrative, with transparency and governance baked in.

Key components:

- Access control & policy enforcement: Role-based access, column/row masking, audit logs, query budget limits.

- Query compilation & optimization: Translate plan into optimized SQL (or appropriate dialect) with partition pruning, caching, reuse of results.

- Execution engine: This could be your warehouse (Snowflake, BigQuery, etc.) or a specialized analytics engine. It should deliver deterministic, mathematically correct results. Tellius emphasises: “LLMs are not sufficient for deterministic analytics.” (Tellius)

- Narrative & explanation generator: Generate human-friendly explanation of results, include definitions, assumptions, lineage, and potential caveats.

- Monitoring, observability & audit trail: Track latency, bytes scanned, cache hits, failures, versioning of semantic models, drift detection.

- Fallback and retry logic: Handle missing data, ambiguous queries, and system failures gracefully (ask the user for clarification, suggest alternatives).
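As a rough illustration of policy enforcement at compile time, the Python sketch below applies a role-based row filter and column masking while building a parameterized query. It makes several assumptions: the `POLICIES` table, the role names, the `invoices` schema, and the `compile_query` function are hypothetical, and an in-memory SQLite database stands in for the warehouse.

```python
import sqlite3

# Hypothetical policies: role -> mandatory row filter and masked columns.
POLICIES = {
    "emea_analyst": {"row_filter": ("region", "EMEA"), "masked": {"customer_email"}},
    "admin": {"row_filter": None, "masked": set()},
}

TABLE = "invoices"
ALLOWED_COLUMNS = {"region", "customer_email", "net_amount"}


def compile_query(role, columns, limit=100):
    """Build (sql, params) with the role's policy applied; deny unknown roles."""
    policy = POLICIES.get(role)
    if policy is None:
        raise PermissionError(f"no policy for role: {role}")
    select = []
    for col in columns:
        if col not in ALLOWED_COLUMNS:  # identifiers only come from an allow-list
            raise ValueError(f"unknown column: {col}")
        select.append(f"'***' AS {col}" if col in policy["masked"] else col)
    sql = f"SELECT {', '.join(select)} FROM {TABLE}"
    params = []
    if policy["row_filter"] is not None:
        filter_col, filter_value = policy["row_filter"]
        sql += f" WHERE {filter_col} = ?"  # values are always bound, never inlined
        params.append(filter_value)
    sql += " LIMIT ?"
    params.append(limit)
    return sql, params


# Stand-in warehouse with two rows of made-up data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE invoices (region TEXT, customer_email TEXT, net_amount REAL)")
conn.executemany(
    "INSERT INTO invoices VALUES (?, ?, ?)",
    [("EMEA", "a@example.com", 120.0), ("APAC", "b@example.com", 80.0)],
)

sql, params = compile_query("emea_analyst", ["region", "customer_email", "net_amount"])
rows = conn.execute(sql, params).fetchall()
print(sql)
print(rows)  # only the EMEA row, with the email column masked
```

In production you would more likely lean on the warehouse's native row-level and masking policies; the sketch only shows that the policy is applied by the compiler rather than left to the LLM.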

Why this matters: Execution is where the rubber meets the road. You must guarantee performance, correctness, security, and traceability — otherwise users will not trust the system.

Typical Pitfalls to Avoid

- Treating the LLM as the whole solution: Without semantic grounding and deterministic execution you risk hallucinations and inconsistent results.

- Skipping user language elicitation: If you don’t model how users actually ask questions, you end up with synonyms and mappings that don’t match their vocabulary.

- Neglecting performance & cost: If queries scan entire warehouses unnecessarily, latency grows and cost explodes.

- Ignoring governance: Without controls you risk exposing sensitive data or giving incorrect results.

- Trying to support all domains at once: A “big bang” multi-domain launch increases complexity — start focused, scale fast.

- Lack of transparency: Users must see how answers are derived (definitions, assumptions, lineage). Without this, they won’t trust the system.

Conclusion

Building an enterprise-grade agentic analytics platform is not a simple plug-and-play job. It requires deliberate architecture, semantic modelling, learning loops, secured execution, and above all, trust. As Tellius puts it: “The next time you see a flashy AI demo … ask yourself: Can it handle 100+ tables? Terabytes of data? Dialect-aware SQL? Guarantee security and prevent hallucinations?”

With our proposed architecture — Custom Data Understanding Layer → Learning & Retrieval Layer → Secured Retrieval & Execution Stage — you’re equipped to build analytics agents that move beyond novelty and deliver real business value. The key is not just “ask your data” but “understand, learn, retrieve, secure, explain”.

Start focused, iterate fast, measure continually — and you’ll shift from “analytics demo” to “analytics mission critical”.

Ready to design or evaluate your agentic analytics architecture? Contact us for a workshop, architecture review or pilot implementation.

Key Takeaways

- Enterprise-grade agentic analytics requires more than “chat with your data” — it needs a layered architecture designed for trust, governance, and scalability.

- The platform blueprint includes three essential layers:

  - Custom Data Understanding Layer: defines schema, semantics, business logic, and user language mappings.

  - Learning & Retrieval Layer: interprets intent, retrieves contextually relevant data, and improves through feedback.

  - Secured Retrieval & Execution Stage: enforces access control, optimizes performance, ensures deterministic results, and provides full auditability.

- Semantic grounding prevents hallucinations and inconsistent outputs, ensuring analytics agents understand business meaning.

- Learning loops and telemetry enable continuous refinement of user intent, synonyms, and data mappings.

- Security and governance are non-negotiable — implement role-based access, query validation, and audit trails from day one.

- Start small (one domain), version your semantic models, and iterate quickly to build trust and scale effectively.

- Transparency matters — always show users how results were derived (definitions, lineage, assumptions).

datatoinsights.ai empowers organizations to move beyond demos — enabling trusted, semantic, and secure agentic analytics ready for enterprise production.